Introduction

Building a neural network from scratch in Python is an excellent way to understand how these models work. By coding each step yourself, you can see how neurons, layers, weights, and biases interact, which gives you a firmer grasp of the concepts behind machine learning. In this tutorial, we will create a simple neural network using only core Python and NumPy, so you can follow along and code the entire process step by step.

This guide covers the following:

- Initializing neurons and layers

- Coding forward propagation

- Calculating loss and optimizing weights with gradient descent

- Training the network to make accurate predictions

If you’re new to neural networks, start with our introduction to neural networks and AI for a high-level overview of how they work.

Step 1: Setting Up the Python Environment

To begin, open a new Python file or Jupyter Notebook. For this tutorial, we will use only core Python and NumPy, a popular library for numerical operations.

- Install NumPy (if not already installed) by running:

pip install numpy
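
To confirm the installation worked, a quick check like the following can help. It is only a minimal sketch: it prints whatever NumPy version is installed and runs one small array operation.

```python
import numpy as np

# Print the installed NumPy version to confirm the import works
print(np.__version__)

# A tiny array operation as a sanity check
check = np.array([1, 2, 3]) * 2
print(check)  # [2 4 6]
```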

Step 2: Initializing the Neural Network Structure

We’ll start by defining the network structure. Our neural network will consist of an input layer, one hidden layer, and an output layer. For simplicity, we’ll create a network that can solve a binary classification task.

Define the Architecture

- Input Layer: Takes input features (e.g., two features for a simple problem)

- Hidden Layer: Contains multiple neurons that learn features from the input

- Output Layer: Produces the final prediction (binary output: 0 or 1)

    import numpy as np

    # Define network structure
    input_size = 2   # Number of input features
    hidden_size = 3  # Number of neurons in the hidden layer
    output_size = 1  # Output (binary classification)
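
To get a feel for how small this network is, the sketch below counts its trainable parameters from the three sizes above. The variable names `hidden_params`, `output_params`, and `total_params` are illustrative and not part of the tutorial code.

```python
# Layer sizes from the tutorial
input_size = 2
hidden_size = 3
output_size = 1

# Each layer has one weight per (input, neuron) pair plus one bias per neuron
hidden_params = input_size * hidden_size + hidden_size   # 2*3 + 3 = 9
output_params = hidden_size * output_size + output_size  # 3*1 + 1 = 4
total_params = hidden_params + output_params

print(total_params)  # 13
```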

Initialize Weights and Biases

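Randomly initializing the weights is crucial. If every weight started at the same value, all hidden neurons would compute the same output and receive the same update, so they could never learn different features. Random values break this symmetry. Biases could start at zero, but here we initialize them randomly as well.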

    # Initialize weights and biases
    np.random.seed(0)  # For reproducibility
    weights_input_hidden = np.random.rand(input_size, hidden_size)
    bias_hidden = np.random.rand(1, hidden_size)
    weights_hidden_output = np.random.rand(hidden_size, output_size)
    bias_output = np.random.rand(1, output_size)
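
The symmetry problem can be seen in a small experiment. The sketch below is an illustration, not part of the tutorial's network: it deliberately starts every weight and bias at the same constant (a hypothetical "bad" initialization), runs 100 update steps of the same kind used later in this guide, and checks whether the three hidden neurons ever diverge from one another. The names `W1`, `b1`, `W2`, and `b2` are shorthand chosen for this example.

```python
import numpy as np

def sigmoid(x):
    return 1 / (1 + np.exp(-x))

X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([[0], [1], [1], [0]], dtype=float)

# Hypothetical "bad" initialization: every parameter starts at the same constant
W1 = np.full((2, 3), 0.5)
b1 = np.full((1, 3), 0.5)
W2 = np.full((3, 1), 0.5)
b2 = np.full((1, 1), 0.5)

for _ in range(100):
    # Forward pass
    h = sigmoid(X @ W1 + b1)
    out = sigmoid(h @ W2 + b2)
    # Backward pass
    grad_out = (y - out) * out * (1 - out)
    grad_h = (grad_out @ W2.T) * h * (1 - h)
    # Parameter updates
    W2 += h.T @ grad_out
    b2 += grad_out.sum(axis=0, keepdims=True)
    W1 += X.T @ grad_h
    b1 += grad_h.sum(axis=0, keepdims=True)

# Compare every column of W1 (one column per hidden neuron) with the first column
columns_identical = np.allclose(W1, W1[:, [0]])
print(columns_identical)  # True
```

Because the three hidden neurons stay identical, the layer behaves like a single neuron, which is why random starting weights matter.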

Step 3: Define the Activation Functions

Activation functions introduce non-linearity to the network, enabling it to model complex relationships. We will use the Sigmoid function for both the hidden and output layers.

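    # Define the Sigmoid activation function
    def sigmoid(x):
        return 1 / (1 + np.exp(-x))

    # Define the derivative of the Sigmoid function for backpropagation.
    # Note: x must already be a sigmoid output, s = sigmoid(z),
    # because the derivative with respect to z is s * (1 - s).
    def sigmoid_derivative(x):
        return x * (1 - x)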
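
One detail that is easy to miss: `sigmoid_derivative` expects a value that has already passed through `sigmoid`, not the raw pre-activation. The sketch below compares it against a finite-difference estimate of the slope to show the difference. The test points in `z` and the step size `eps` are arbitrary choices for illustration.

```python
import numpy as np

def sigmoid(x):
    return 1 / (1 + np.exp(-x))

def sigmoid_derivative(s):
    # s is assumed to already be a sigmoid output
    return s * (1 - s)

z = np.array([-2.0, 0.0, 3.0])  # arbitrary pre-activation values
eps = 1e-6

# Central finite-difference estimate of d(sigmoid)/dz
numeric = (sigmoid(z + eps) - sigmoid(z - eps)) / (2 * eps)

analytic = sigmoid_derivative(sigmoid(z))  # correct usage
wrong = sigmoid_derivative(z)              # incorrect: raw z passed in

print(np.allclose(numeric, analytic))  # True
print(np.allclose(numeric, wrong))     # False
```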

Want to go deeper into how activation functions shape AI models? Read our breakdown of activation functions and network internals.

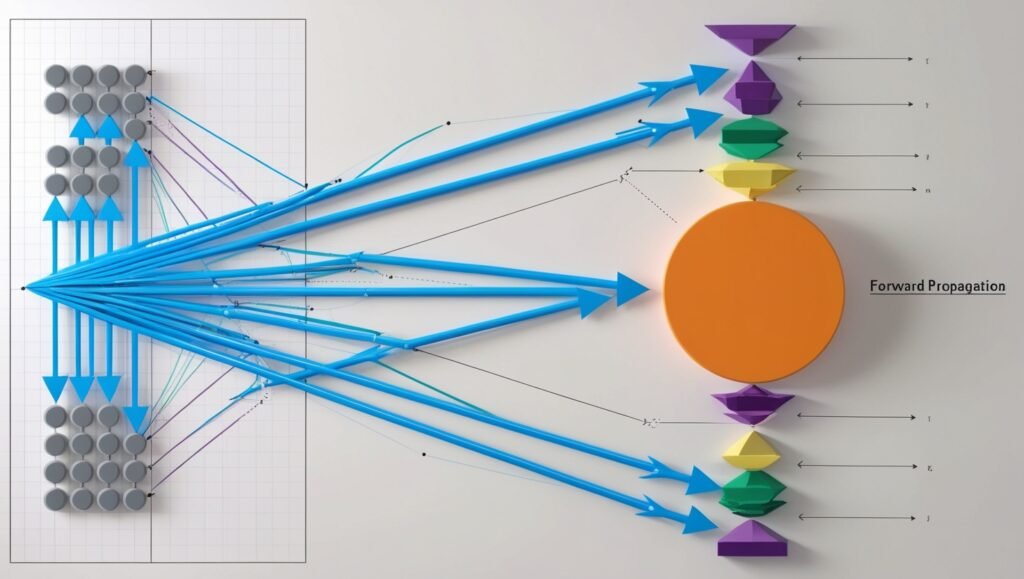

Step 4: Forward Propagation

Forward propagation is the process by which data moves through the network from the input layer to the output layer. Each neuron calculates its output by applying weights, adding a bias, and passing the result through an activation function.

    # Define forward propagation
    def forward_propagation(inputs):
        # Hidden layer calculations
        hidden_input = np.dot(inputs, weights_input_hidden) + bias_hidden
        hidden_output = sigmoid(hidden_input)

        # Output layer calculations
        final_input = np.dot(hidden_output, weights_hidden_output) + bias_output
        final_output = sigmoid(final_input)

        return hidden_output, final_output
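
It can help to trace the array shapes through a forward pass. The following standalone sketch repeats the same calculation on a batch of four samples, with shortened names (`W1`, `b1`, `W2`, `b2`, `batch`) that are assumptions made for this example. The `(1, 3)` and `(1, 1)` bias rows are broadcast across all four samples.

```python
import numpy as np

def sigmoid(x):
    return 1 / (1 + np.exp(-x))

batch = np.array([[0, 0], [0, 1], [1, 0], [1, 1]])  # 4 samples, 2 features

np.random.seed(0)
W1 = np.random.rand(2, 3)  # input -> hidden weights
b1 = np.random.rand(1, 3)  # hidden biases
W2 = np.random.rand(3, 1)  # hidden -> output weights
b2 = np.random.rand(1, 1)  # output bias

hidden = sigmoid(batch @ W1 + b1)   # (4, 2) @ (2, 3) + (1, 3) -> (4, 3)
output = sigmoid(hidden @ W2 + b2)  # (4, 3) @ (3, 1) + (1, 1) -> (4, 1)

print(hidden.shape, output.shape)  # (4, 3) (4, 1)
```

Every output lies strictly between 0 and 1 because it is produced by the sigmoid.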

Step 5: Define the Loss Function

The loss function measures how well the network’s predictions match the actual values. We’ll use Mean Squared Error (MSE) for simplicity.

    # Define the Mean Squared Error (MSE) loss function
    def mse_loss(actual, predicted):
        return np.mean((actual - predicted) ** 2)
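
As a quick worked example, the sketch below evaluates the loss on four made-up predictions. The values in `predicted` are invented for illustration and are not output from the network.

```python
import numpy as np

def mse_loss(actual, predicted):
    return np.mean((actual - predicted) ** 2)

actual = np.array([[0], [1], [1], [0]])
predicted = np.array([[0.1], [0.8], [0.6], [0.3]])  # made-up predictions

# Squared errors: 0.01, 0.04, 0.16, 0.09 -> sum 0.30 -> mean 0.075
loss = mse_loss(actual, predicted)
print(round(float(loss), 3))  # 0.075
```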

Step 6: Backpropagation and Gradient Descent

Backpropagation calculates the gradients of the loss with respect to the weights and biases, which tells the network how to change each parameter to reduce the error. Gradient descent is the optimization technique that updates the weights and biases in the direction that reduces the error. To keep the code short, the updates below apply the gradient terms directly, without a separate learning-rate parameter.

    # Define the backpropagation function
    def backpropagation(inputs, hidden_output, final_output, actual):
        global weights_input_hidden, bias_hidden, weights_hidden_output, bias_output

        # Output layer error and gradient
        error_output = actual - final_output
        gradient_output = error_output * sigmoid_derivative(final_output)

        # Hidden layer error and gradient
        error_hidden = gradient_output.dot(weights_hidden_output.T)
        gradient_hidden = error_hidden * sigmoid_derivative(hidden_output)

        # Update weights and biases using gradient descent.
        # The error is (actual - predicted), so adding these terms moves
        # against the loss gradient; no separate learning rate is applied.
        weights_hidden_output += hidden_output.T.dot(gradient_output)
        bias_output += np.sum(gradient_output, axis=0, keepdims=True)
        weights_input_hidden += inputs.T.dot(gradient_hidden)
        bias_hidden += np.sum(gradient_hidden, axis=0, keepdims=True)
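
A common way to sanity-check backpropagation code is to compare it with a numerical gradient. The sketch below does this for the hidden-to-output weights only. For the MSE loss averaged over N samples with one output, the chain rule gives dLoss/dW2 = -(2/N) * hidden_output.T.dot(gradient_output), so the update term used in this guide should equal -(N/2) times the numerical gradient. The helper `loss_fn`, the short parameter names, and the step size `eps` are assumptions made for this example.

```python
import numpy as np

def sigmoid(x):
    return 1 / (1 + np.exp(-x))

def loss_fn(W1, b1, W2, b2, X, y):
    # Forward pass followed by mean squared error
    h = sigmoid(X @ W1 + b1)
    out = sigmoid(h @ W2 + b2)
    return np.mean((y - out) ** 2)

X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([[0], [1], [1], [0]], dtype=float)

np.random.seed(0)
W1 = np.random.rand(2, 3)
b1 = np.random.rand(1, 3)
W2 = np.random.rand(3, 1)
b2 = np.random.rand(1, 1)

# Update term for W2, computed the same way as in the backpropagation step
h = sigmoid(X @ W1 + b1)
out = sigmoid(h @ W2 + b2)
grad_out = (y - out) * out * (1 - out)
update_W2 = h.T @ grad_out

# Numerical gradient of the loss with respect to each entry of W2
eps = 1e-6
num_grad = np.zeros_like(W2)
for i in range(W2.shape[0]):
    W2_plus = W2.copy()
    W2_plus[i, 0] += eps
    W2_minus = W2.copy()
    W2_minus[i, 0] -= eps
    num_grad[i, 0] = (
        loss_fn(W1, b1, W2_plus, b2, X, y) - loss_fn(W1, b1, W2_minus, b2, X, y)
    ) / (2 * eps)

n = X.shape[0]
matches = np.allclose(update_W2, -(n / 2) * num_grad, atol=1e-7)
print(matches)  # True
```

The match confirms that adding the update term moves the weights against the loss gradient, with an effective step size of N/2 relative to the averaged loss.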

Step 7: Training the Network

Now that we have defined forward propagation, backpropagation, and gradient descent, we can train the network. Training involves iterating through forward and backward propagation over multiple epochs to minimize the loss.

    # Define the training function
    def train_network(inputs, actual, epochs):
        for epoch in range(epochs):
            # Forward propagation
            hidden_output, final_output = forward_propagation(inputs)

            # Calculate and print loss every 100 epochs
            loss = mse_loss(actual, final_output)
            if epoch % 100 == 0:
                print(f'Epoch {epoch}, Loss: {loss}')

            # Backpropagation
            backpropagation(inputs, hidden_output, final_output, actual)
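
As a variation, the loop can be written without global variables and with an explicit learning rate. The sketch below is one possible arrangement, not the tutorial's own code: `init_params`, `train`, the dictionary keys, and the choice of `learning_rate=0.5` with 2000 epochs are all hypothetical. It records the loss at every epoch so you can inspect how training progressed.

```python
import numpy as np

def sigmoid(x):
    return 1 / (1 + np.exp(-x))

def init_params(input_size, hidden_size, output_size, seed=0):
    # Hypothetical helper: keeps the parameters in a dict instead of globals
    np.random.seed(seed)
    return {
        "W1": np.random.rand(input_size, hidden_size),
        "b1": np.random.rand(1, hidden_size),
        "W2": np.random.rand(hidden_size, output_size),
        "b2": np.random.rand(1, output_size),
    }

def train(params, X, y, epochs, learning_rate):
    history = []
    for _ in range(epochs):
        # Forward pass
        h = sigmoid(X @ params["W1"] + params["b1"])
        out = sigmoid(h @ params["W2"] + params["b2"])
        history.append(float(np.mean((y - out) ** 2)))

        # Backward pass, using the same formulas as the backpropagation step
        grad_out = (y - out) * out * (1 - out)
        grad_h = (grad_out @ params["W2"].T) * h * (1 - h)

        # Updates scaled by the learning rate
        params["W2"] += learning_rate * (h.T @ grad_out)
        params["b2"] += learning_rate * grad_out.sum(axis=0, keepdims=True)
        params["W1"] += learning_rate * (X.T @ grad_h)
        params["b1"] += learning_rate * grad_h.sum(axis=0, keepdims=True)
    return history

X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([[0], [1], [1], [0]], dtype=float)

params = init_params(2, 3, 1)
history = train(params, X, y, epochs=2000, learning_rate=0.5)
print(f"first loss: {history[0]:.4f}, last loss: {history[-1]:.4f}")
```

Keeping the loss history makes it easy to plot or compare runs with different learning rates.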

Step 8: Testing the Network

After training, we can run inputs through the network to see its predictions. To measure how well the network generalizes, pass in data it did not see during training.

    # Define a test function
    def test_network(inputs):
        _, final_output = forward_propagation(inputs)
        return final_output

Example: Training and Testing the Network

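Let’s train and test our network on the XOR truth table, a small binary classification problem that a network without a hidden layer cannot solve. Because XOR has only four possible inputs, we test on the same four points we train on.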

    # Sample dataset
    inputs = np.array([[0, 0], [0, 1], [1, 0], [1, 1]])
    actual = np.array([[0], [1], [1], [0]])  # XOR logic

    # Train the network
    train_network(inputs, actual, epochs=1000)

    # Test the network
    print("Predictions after training:")
    print(test_network(inputs))
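
The network outputs values between 0 and 1 rather than hard class labels. A common convention is to threshold them at 0.5 to get a 0 or 1 prediction. The sketch below shows that step on made-up numbers. The values in `probabilities` are invented for illustration and are not actual output from the trained network.

```python
import numpy as np

# Made-up sigmoid outputs, standing in for test_network(inputs)
probabilities = np.array([[0.08], [0.93], [0.88], [0.12]])
actual = np.array([[0], [1], [1], [0]])  # XOR targets

# Threshold at 0.5 to turn each output into a class label
labels = (probabilities >= 0.5).astype(int)

# Fraction of labels that match the targets
accuracy = np.mean(labels == actual)

print(labels.ravel())  # [0 1 1 0]
print(accuracy)        # 1.0
```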

Conclusion

By building a neural network from scratch, we gain insight into how each part of the network functions. From initializing weights and biases to implementing forward and backward propagation, each step helps demystify the neural network’s learning process. Although this example is simple, it lays the groundwork for building more complex models and understanding the underlying mechanisms that power artificial intelligence.

Now that you’ve built your own model, explore how neural networks are used in real-world AI finance applications.

